What is Security Token Service (STS) in Red Hat OpenShift Service on AWS?

After reading this resource, you will know:

- The two main deployment options: with STS or with AWS Identity and Access Management (IAM) users

- The differences between them

- Why STS is more secure and is the preferred option

- How Red Hat® OpenShift® Service on AWS (ROSA) with STS works

What are the different "options" (credential methods)?

Because ROSA is a service that manages infrastructure resources in your AWS account, the question is: how do you grant Red Hat the permissions it needs to perform the required actions in your AWS account?

In the documentation, you might have seen references to deploying a cluster “with IAM users” or “with STS” and wondered what the differences are.

There are currently two supported methods for granting those permissions:

- Using static IAM user credentials with the AdministratorAccess policy - “with IAM users” (not recommended)

- Using AWS STS with short-lived, dynamic tokens (preferred)

When ROSA was first released there was only one method, the option with IAM users. This method requires an IAM user with the AdministratorAccess policy (limited to the AWS account using ROSA), which allows the installer to create all the resources it needs, and allows the cluster to create/expand its own credentials as needed.

Since then, we have improved the service to implement security best practices and introduced a method that uses AWS STS. The STS method uses predefined roles and policies to grant the service only the minimal permissions it needs in your AWS account (least privilege) to create and operate the cluster.

While both methods are currently enabled, the “with STS” method is the preferred and recommended option.

What is AWS Security Token Service (STS)?

As stated in the AWS documentation, AWS STS “enables you to request temporary, limited-privilege credentials for AWS Identity and Access Management (IAM) users or for users you authenticate (federated users).”

In the case of ROSA, AWS STS is used to grant the service limited, short-term access to resources in your AWS account. When these credentials expire (typically one hour after they are requested), AWS no longer recognizes them, and any API request made with them is rejected.
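The following minimal Python sketch makes the expiry behavior concrete. It is not ROSA or AWS code. It models the four fields that STS returns for temporary credentials (access key ID, secret access key, session token and expiration) and rejects the credentials once the expiration time has passed. The `issue_credentials` helper is hypothetical and only stands in for a real STS request. The one-hour default mirrors the typical lifetime described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TemporaryCredentials:
    """Mirrors the four fields of the Credentials element in an STS response."""
    access_key_id: str
    secret_access_key: str
    session_token: str
    expiration: datetime


def issue_credentials(now: datetime, lifetime: timedelta = timedelta(hours=1)) -> TemporaryCredentials:
    """Hypothetical stand-in for an STS call: dummy values that expire after `lifetime`."""
    return TemporaryCredentials(
        access_key_id="EXAMPLEKEYID",
        secret_access_key="example-secret",
        session_token="example-session-token",
        expiration=now + lifetime,
    )


def is_usable(creds: TemporaryCredentials, now: datetime) -> bool:
    """Credentials are only accepted before their expiration time."""
    return now < creds.expiration


issued_at = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
creds = issue_credentials(issued_at)
print(is_usable(creds, issued_at + timedelta(minutes=59)))  # True
print(is_usable(creds, issued_at + timedelta(minutes=61)))  # False
```

Because no long-lived secret exists, nothing needs rotating. A caller simply requests a new set when `is_usable` returns False.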

What makes this more secure?

- Explicit, limited set of roles and policies - the user creates them ahead of time and knows exactly which permissions are requested and which roles are used.

- The service cannot do anything outside of those permissions.

- Whenever the service needs to perform an action, it obtains credentials that expire in at most 1 hour, so there is no need to rotate or revoke them. Because the credentials expire, the risk of them leaking and being reused is reduced.
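A deliberately simplified Python check can illustrate the point that the service cannot act outside its permissions. The policy below uses the IAM JSON policy shape. The two allowed actions are hypothetical examples and do not reflect the contents of any ROSA policy. `is_action_allowed` ignores Deny statements, resources and conditions, all of which real IAM policy evaluation takes into account.

```python
from fnmatch import fnmatchcase

# Hypothetical permissions policy in IAM JSON shape (not an actual ROSA policy).
PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["ec2:RunInstances", "ec2:Describe*"],
            "Resource": "*",
        }
    ],
}


def is_action_allowed(policy: dict, action: str) -> bool:
    """Return True only if some Allow statement matches `action`.

    Simplification: Deny statements, Resource and Condition are ignored.
    """
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow":
            continue
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive, so compare in lower case.
        if any(fnmatchcase(action.lower(), pattern.lower()) for pattern in actions):
            return True
    return False  # Implicit deny: anything not explicitly allowed is refused.


print(is_action_allowed(PERMISSIONS_POLICY, "ec2:DescribeInstances"))  # True
print(is_action_allowed(PERMISSIONS_POLICY, "iam:CreateUser"))  # False
```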

For more information on security with ROSA, view the Red Hat OpenShift Service on AWS security FAQ.

How does ROSA use STS?

ROSA uses STS to grant permissions defined as least-privilege policies to specific and segregated IAM roles with short-term security credentials. These credentials are associated with the IAM roles that are specific to each component and cluster that makes AWS API calls.

This approach follows the principle of least privilege and aligns much more closely with secure practices for cloud service resource management.

The ROSA CLI manages the STS roles and policies. Each role and policy is assigned to a specific task and acts on AWS resources as part of OpenShift functionality. One drawback of using STS is that roles and policies must be created for each cluster.

To make this easier, the installation tools provide all the commands and files needed to create them. The CLI can also create these roles and policies for you in automatic mode. Please see the Red Hat documentation for more information about the different --mode options.

What components are specific to ROSA with STS?

Components include:

- AWS Infrastructure - This provides the infrastructure required for the cluster. This will contain the actual EC2 instances, storage, and networking components. You can review AWS compute types to see supported instance types for compute nodes and the provisioned AWS infrastructure section for the control plane and infrastructure nodes configuration.

- AWS STS - see the section “What is AWS Security Token Service (STS)?” above.

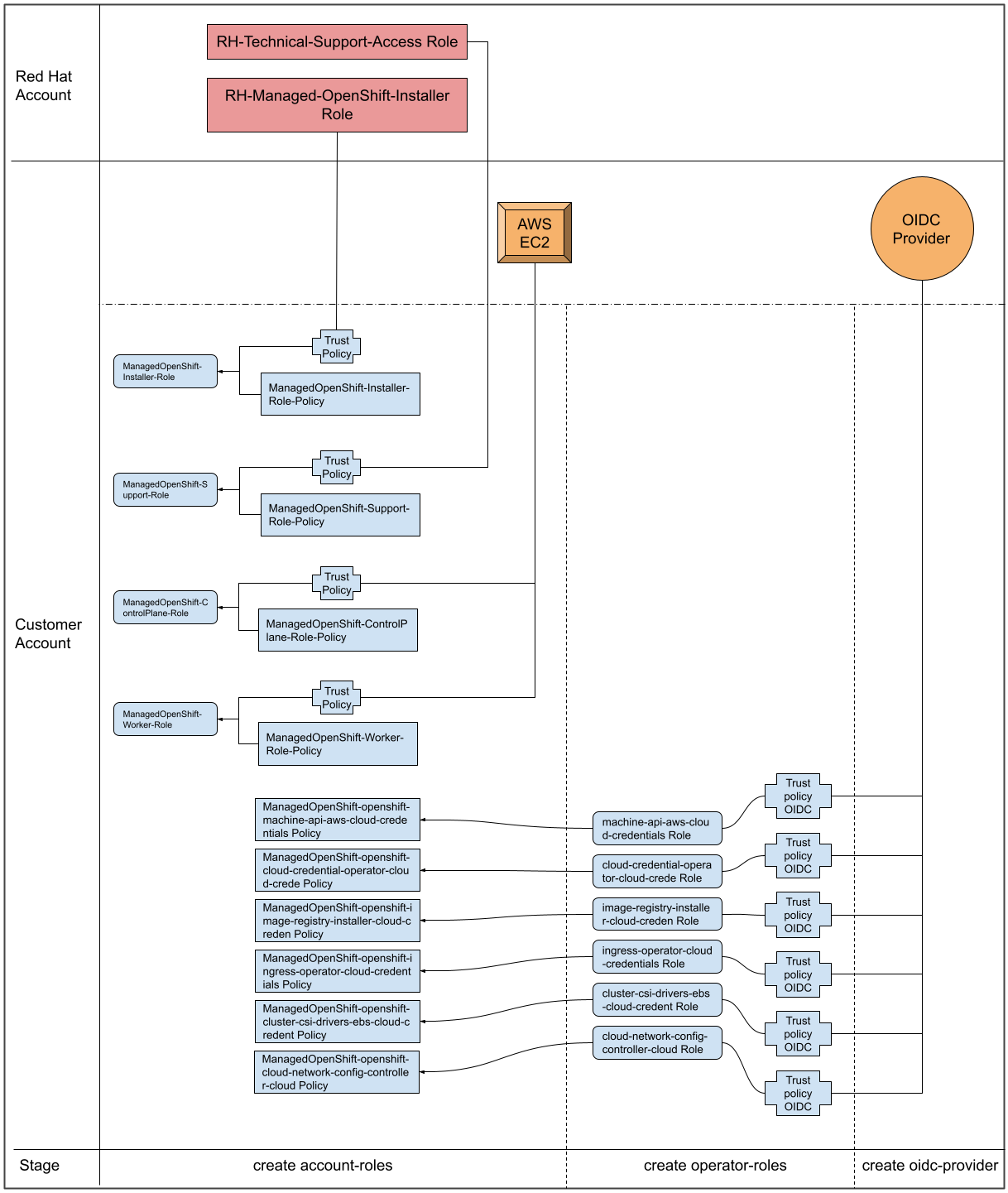

- Roles and policies - It could be argued that this is the main component that differentiates the “with STS” method from the “with IAM users” method. The roles and policies are divided into account-wide roles and policies, and operator roles and policies. The policies (permissions policies) determine which actions each role is allowed to perform. The six account-wide policies whose names begin with ManagedOpenShift-openshift- are used by the operator roles. The operator roles are created in a second step because they depend on an existing cluster name. For this reason they are not created at the same time as the account-wide roles. Please see the documentation for more details about the individual roles and policies.

  - The account-wide roles are:

    - ManagedOpenShift-Installer-Role

    - ManagedOpenShift-ControlPlane-Role

    - ManagedOpenShift-Worker-Role

    - ManagedOpenShift-Support-Role

  - The account-wide policies are:

    - ManagedOpenShift-Installer-Role-Policy

    - ManagedOpenShift-ControlPlane-Role-Policy

    - ManagedOpenShift-Worker-Role-Policy

    - ManagedOpenShift-Support-Role-Policy

    - ManagedOpenShift-openshift-ingress-operator-cloud-credentials

    - ManagedOpenShift-openshift-cluster-csi-drivers-ebs-cloud-credent

    - ManagedOpenShift-openshift-cloud-network-config-controller-cloud

    - ManagedOpenShift-openshift-machine-api-aws-cloud-credentials

    - ManagedOpenShift-openshift-cloud-credential-operator-cloud-crede

    - ManagedOpenShift-openshift-image-registry-installer-cloud-creden

  - The operator roles are:

    - <cluster-name>-xxxx-openshift-cluster-csi-drivers-ebs-cloud-credent

    - <cluster-name>-xxxx-openshift-cloud-network-config-controller-cloud

    - <cluster-name>-xxxx-openshift-machine-api-aws-cloud-credentials

    - <cluster-name>-xxxx-openshift-cloud-credential-operator-cloud-crede

    - <cluster-name>-xxxx-openshift-image-registry-installer-cloud-creden

    - <cluster-name>-xxxx-openshift-ingress-operator-cloud-credentials

  - Trust policies are created for each account-wide and operator role.

- OIDC - Provides a mechanism for cluster operators to authenticate with AWS in order to assume the cluster roles (via a trust policy) and obtain temporary credentials from STS in order to make the required API calls.
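Because operator roles are created per cluster, each cluster gets its own set of role names. Several names in the lists above, such as ManagedOpenShift-openshift-cluster-csi-drivers-ebs-cloud-credent, are exactly 64 characters long. This matches the IAM limit on role-name length. The Python sketch below assumes a simple `<prefix>-<suffix>` scheme with that truncation. `cluster_prefix` stands for the `<cluster-name>-xxxx` part. The scheme is a hypothetical illustration and is not the CLI's actual naming code.

```python
IAM_ROLE_NAME_MAX = 64  # IAM limit on role-name length

# Two operator-role suffixes copied from the list above; the others appear there already truncated.
OPERATOR_SUFFIXES = [
    "openshift-machine-api-aws-cloud-credentials",
    "openshift-ingress-operator-cloud-credentials",
]


def operator_role_names(cluster_prefix: str) -> list[str]:
    """Build one role name per operator for a single cluster (hypothetical scheme).

    Names longer than the IAM limit are cut to 64 characters.
    """
    return [f"{cluster_prefix}-{suffix}"[:IAM_ROLE_NAME_MAX] for suffix in OPERATOR_SUFFIXES]


for name in operator_role_names("my-long-production-cluster-a1b2"):
    print(len(name), name)
```

Two clusters with different prefixes never share operator role names. This is why these roles cannot be created once per account the way the account-wide roles are.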

What is the process for deploying a cluster that uses STS?

The following is the general process for deploying a cluster using STS:

- Create the account-wide roles and policies.

- Assign the permissions policy to the corresponding role.

- Create the cluster.

- Create the operator roles.

- Assign the permissions policies to the corresponding operator roles.

- Create the OIDC provider.

- Cluster is created. Done.

Please don’t let these steps intimidate you. You are not expected to create these resources from scratch.

The CLI creates the required JSON files for you and outputs the commands you need to run. It can even take this a step further and do it all for you, if desired. Use the --mode manual flag to create roles and policies yourself from the generated files and commands, or use the --mode auto flag to have the CLI create them automatically. See the documentation for details about the modes, or see the Deploy the cluster section in this learning path.
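The Python snippet below is a rough sketch of the order in which the ROSA CLI is invoked for this flow. It only builds and prints the commands and does not run them. The subcommands and flags shown (`create account-roles`, `create cluster --sts`, `create operator-roles`, `create oidc-provider`, `--mode`, `--cluster`) follow the ROSA CLI as documented for clusters with STS. Treat them as assumptions and confirm them with `rosa <command> --help` for your CLI version.

```python
import shlex


def sts_deployment_commands(cluster_name: str, mode: str = "auto") -> list[list[str]]:
    """Return the ROSA CLI invocations for the STS flow, in order, without running them."""
    if mode not in ("auto", "manual"):
        raise ValueError("mode must be 'auto' or 'manual'")
    return [
        # 1. Account-wide roles and policies
        ["rosa", "create", "account-roles", "--mode", mode],
        # 2. The cluster itself, using STS
        ["rosa", "create", "cluster", "--cluster-name", cluster_name, "--sts"],
        # 3. Operator roles for this specific cluster
        ["rosa", "create", "operator-roles", "--cluster", cluster_name, "--mode", mode],
        # 4. OIDC provider for this specific cluster
        ["rosa", "create", "oidc-provider", "--cluster", cluster_name, "--mode", mode],
    ]


for argv in sts_deployment_commands("mycluster"):
    print(shlex.join(argv))
```

Keeping the commands as argument lists, not preformatted strings, makes it easy to pass them to `subprocess.run` later without shell-quoting issues.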

How does ROSA with STS work?

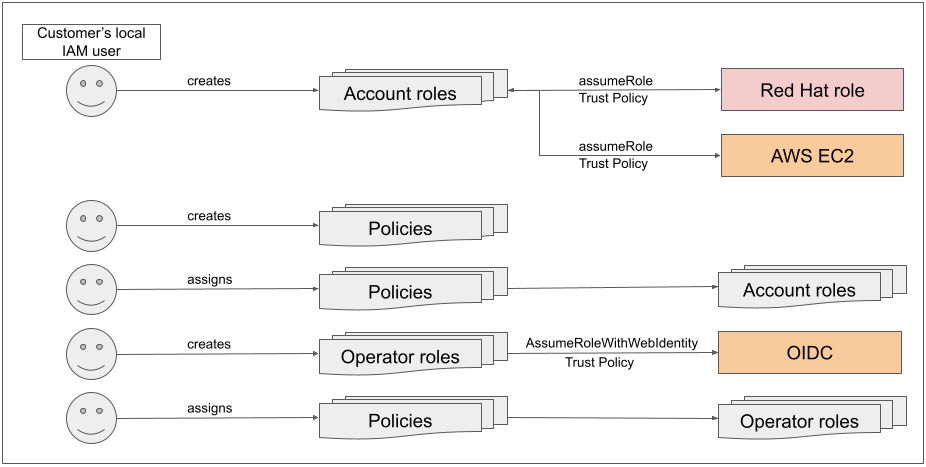

To get started, the user creates the required account-wide roles and account-wide policies using their local AWS user. As part of creating these roles, a trust policy is created that allows a Red Hat-owned role to assume these roles (a cross-account trust policy).

Trust policies are also created for the EC2 service, allowing workloads on EC2 instances to assume roles and get credentials. The user then assigns each role its corresponding permissions policy.
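Both kinds of trust policies described above can be written as IAM JSON policy documents. In this Python sketch, the Red Hat account ID is a hypothetical placeholder, and the role name is taken from the installer example later in this section. The documents the ROSA CLI actually generates may contain additional statements or conditions.

```python
import json

# Hypothetical placeholder values, not real Red Hat identifiers.
RED_HAT_ACCOUNT_ID = "111111111111"
RED_HAT_INSTALLER_ROLE = "RH-Managed-OpenShift-Installer"


def cross_account_trust_policy(account_id: str, role_name: str) -> dict:
    """Allow a role in another AWS account to call sts:AssumeRole on this role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:role/{role_name}"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def ec2_trust_policy() -> dict:
    """Allow the EC2 service to assume this role on behalf of workloads on instances."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(cross_account_trust_policy(RED_HAT_ACCOUNT_ID, RED_HAT_INSTALLER_ROLE), indent=2))
print(json.dumps(ec2_trust_policy(), indent=2))
```

Neither document grants any permissions by itself. A trust policy only says who may assume the role, and the attached permissions policy says what the role may do.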

After the account-wide roles and policies are created, we can proceed with creating the cluster.

Once we initiate cluster creation, we can create the operator-related roles so that the operators on the cluster can make AWS API calls. The corresponding permissions policies created earlier are then assigned to these roles, along with a trust policy that references an OIDC provider.

These roles differ from the account-wide roles because they represent the operators on the cluster (which ultimately are pods) that need access to AWS resources. IAM roles cannot be attached to pods, so we need to create a trust policy with an OIDC provider. This lets the pods that make up each operator assume the roles they need.
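An operator role's trust policy names the cluster's OIDC provider as a federated principal and restricts which token subject may assume the role. The Python sketch below builds such a document. The issuer host, account ID and service-account name in the usage example are hypothetical. The subject format `system:serviceaccount:<namespace>:<name>` is the standard Kubernetes service-account token subject.

```python
import json


def oidc_trust_policy(account_id: str, issuer: str, namespace: str, service_account: str) -> dict:
    """Trust policy that lets one Kubernetes service account assume the role via web identity.

    `issuer` is the OIDC provider URL without the https:// scheme.
    """
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only tokens whose subject is this exact service account match.
                        f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


# Hypothetical values for illustration only.
policy = oidc_trust_policy(
    "123456789012", "oidc.example.com/mycluster", "openshift-machine-api", "machine-api-controllers"
)
print(json.dumps(policy, indent=2))
```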

Once we’ve assigned the corresponding permissions policies to the roles, the final step is to create the OIDC provider.

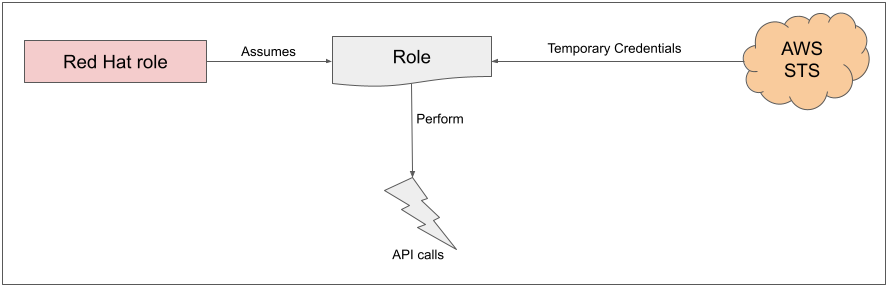

After this process has completed, whenever a role is needed, the workload (running under the Red Hat role) assumes the role in the customer's AWS account, obtains temporary credentials from AWS STS, and begins performing actions (via API calls) within that account, as permitted by the assumed role’s permissions policy.

It should be noted that these credentials are temporary and have a maximum duration of 1 hour.

Placing this all together we can see the following flow and relationships:

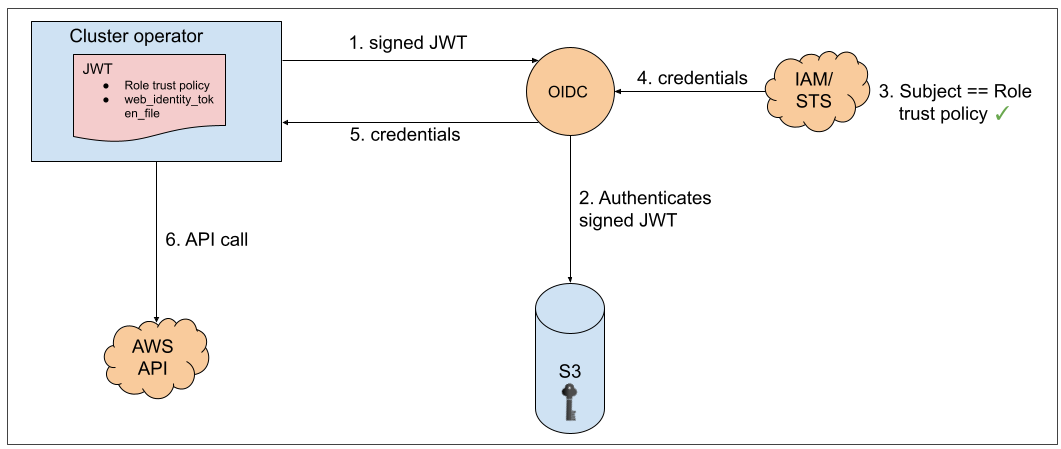

Operators in the cluster use the following process to get the credentials they need to perform their tasks. As we saw above, each operator has an operator role, a permissions policy, and a trust policy with an OIDC provider. The operator assumes its role (AssumeRoleWithWebIdentity) by passing the role and a signed JWT read from a token file (web_identity_token_file). The token is then authenticated against the OIDC provider: its signature is verified with a public key that was created at cluster creation time and stored in an S3 bucket.

Next, the subject in the signed token is checked against the subject allowed by the role's trust policy. This ensures that an operator can obtain only the role it is allowed to assume. If the subject matches, temporary credentials are returned to the operator so that it can make AWS API calls.
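The subject check can be sketched in Python as shown below. This sketch covers only the comparison step. `decode_claims` reads the token payload without verifying its signature. In the real flow, the signature is verified with the OIDC provider's public key before any claim is trusted. The issuer and service-account values are hypothetical, and the dummy tokens are built locally for the demo.

```python
import base64
import json

# Hypothetical issuer (OIDC provider host/path) and trust policy for one operator role.
ISSUER = "oidc.example.com/mycluster"
ALLOWED_SUB = "system:serviceaccount:openshift-machine-api:machine-api-controllers"
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Federated": f"arn:aws:iam::123456789012:oidc-provider/{ISSUER}"},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {"StringEquals": {f"{ISSUER}:sub": ALLOWED_SUB}},
        }
    ],
}


def _b64url(data: dict) -> str:
    """Encode a dict as unpadded base64url JSON, the way JWT segments are encoded."""
    return base64.urlsafe_b64encode(json.dumps(data).encode()).rstrip(b"=").decode()


def make_unsigned_token(claims: dict) -> str:
    """Demo only: a header.payload.signature string with a fake signature."""
    return f"{_b64url({'alg': 'RS256', 'typ': 'JWT'})}.{_b64url(claims)}.fake-signature"


def decode_claims(token: str) -> dict:
    """Decode the JWT payload segment. Does NOT verify the signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the padding that JWTs strip
    return json.loads(base64.urlsafe_b64decode(payload))


def subject_allowed(claims: dict, policy: dict, issuer: str) -> bool:
    """True if the issuer matches and the sub claim equals the trust policy's allowed subject."""
    if claims.get("iss") != f"https://{issuer}":
        return False
    for statement in policy["Statement"]:
        allowed = statement.get("Condition", {}).get("StringEquals", {}).get(f"{issuer}:sub")
        if statement["Effect"] == "Allow" and allowed is not None and allowed == claims.get("sub"):
            return True
    return False


good = make_unsigned_token({"iss": f"https://{ISSUER}", "sub": ALLOWED_SUB})
other = make_unsigned_token({"iss": f"https://{ISSUER}", "sub": "system:serviceaccount:demo:other"})
print(subject_allowed(decode_claims(good), TRUST_POLICY, ISSUER))   # True
print(subject_allowed(decode_claims(other), TRUST_POLICY, ISSUER))  # False
```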

Here is an illustration of this concept:

To illustrate how this works, let's take a look at two examples:

- How nodes are created at cluster install - The installer, using the RH-Managed-OpenShift-Installer role, assumes the ManagedOpenShift-Installer-Role in the customer's account (via the trust policy), which returns temporary credentials from AWS STS. Red Hat (the installer) then makes the required API calls, using the temporary credentials just received from STS, to create the infrastructure required in AWS. The same process applies to support, except that a Red Hat SRE takes the place of the installer.

- Scaling out the cluster - The machine-api-operator assumes (via AssumeRoleWithWebIdentity) the operator role whose name ends in openshift-machine-api-aws-cloud-credentials. This triggers the sequence described above for cluster operators, after which the operator receives temporary credentials. The machine-api-operator can then make the API calls needed to add more EC2 instances to the cluster.
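In the scaling example, AssumeRoleWithWebIdentity receives three inputs: the role ARN, a session name, and the token read from `web_identity_token_file`. The Python sketch below assumes the operator's credentials are provided as an AWS shared-config style file containing `role_arn` and `web_identity_token_file` keys. It only assembles the request parameters and does not call AWS. In practice, AWS SDKs can read these two keys and make the call themselves. The role ARN, session name and token contents are hypothetical.

```python
import configparser
import tempfile
from pathlib import Path


def web_identity_request(config_text: str, session_name: str) -> dict:
    """Assemble AssumeRoleWithWebIdentity parameters from a shared-config style file.

    Only builds the parameters; nothing is sent to AWS.
    """
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    profile = parser["default"]
    token = Path(profile["web_identity_token_file"]).read_text().strip()
    return {
        "RoleArn": profile["role_arn"],
        "RoleSessionName": session_name,
        "WebIdentityToken": token,
    }


with tempfile.TemporaryDirectory() as tmp:
    token_path = Path(tmp) / "token"
    token_path.write_text("header.payload.signature\n")  # stands in for the service-account token
    config = (
        "[default]\n"
        "role_arn = arn:aws:iam::123456789012:role/mycluster-a1b2-openshift-machine-api-aws-cloud-credentials\n"
        f"web_identity_token_file = {token_path}\n"
    )
    params = web_identity_request(config, session_name="machine-api-scale-out")
    print(params["RoleArn"])
```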

Now that you know these key concepts, you are ready to deploy a cluster.