Patch token-refresher to use a cluster proxy

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Currently, if you deploy a ROSA or OSD cluster with a cluster-wide proxy, the token-refresher pod in the openshift-monitoring namespace goes into CrashLoopBackOff. There is an RFE open to resolve this, but until it is implemented the issue can affect the cluster's ability to report telemetry and, potentially, to update. This article provides a workaround: use the patch-operator to patch the token-refresher deployment until the RFE has been resolved.

Prerequisites

A logged-in user with cluster-admin rights to a ROSA or OSD cluster deployed using a cluster-wide proxy.

You can use the --http-proxy, --https-proxy, --no-proxy, and --additional-trust-bundle-file arguments to configure a ROSA cluster to use a proxy.

Problem Demo

Check your pods in the openshift-monitoring namespace

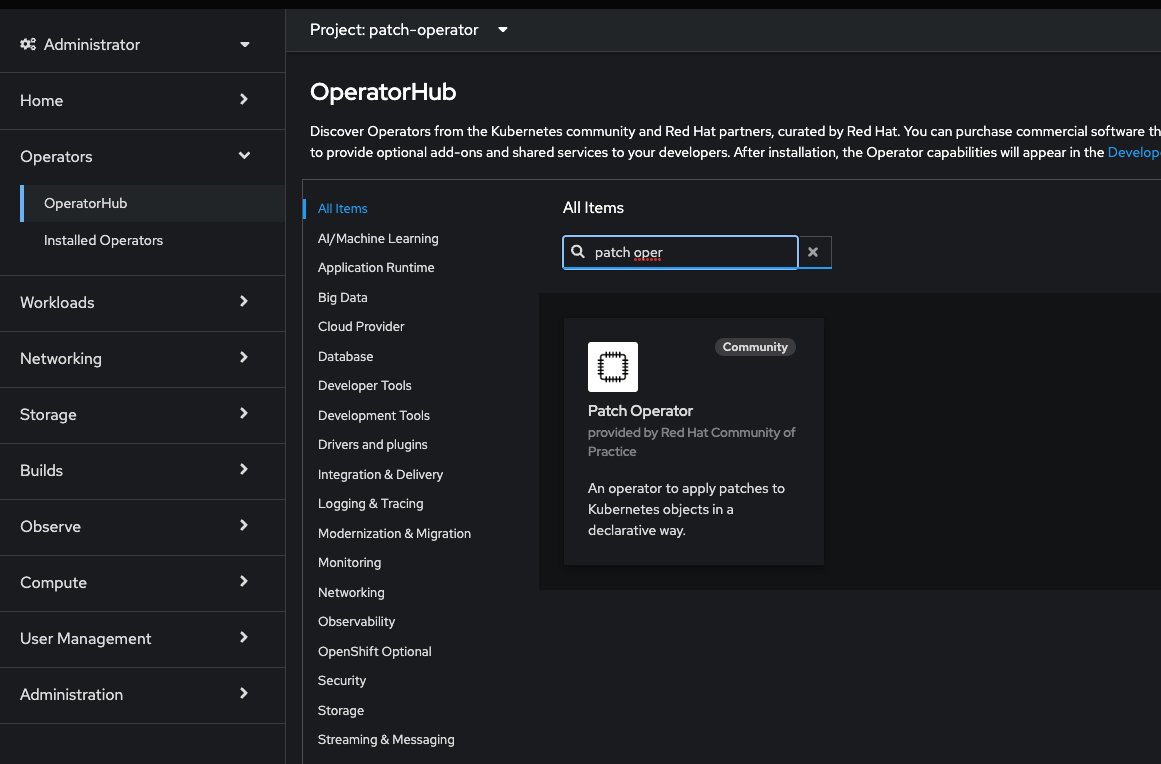
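The same check can be run from the command line. This is a minimal sketch, assuming the oc CLI is installed and already logged in to the cluster. The `NAMESPACE` variable is introduced here for illustration only.

```shell
# Namespace named in this article.
NAMESPACE=openshift-monitoring

# Assumes the oc CLI is installed and logged in with cluster-admin rights.
if command -v oc >/dev/null 2>&1; then
  # List the monitoring pods and keep only the token-refresher lines.
  oc get pods -n "$NAMESPACE" | grep token-refresher \
    || echo "no token-refresher pod listed in $NAMESPACE"
else
  echo "oc CLI not found; install it and log in first"
fi
```

On an affected cluster, the STATUS column for the token-refresher pod should show CrashLoopBackOff.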

Install the patch-operator from the console

Log in to your cluster as a user able to install Operators and browse to OperatorHub

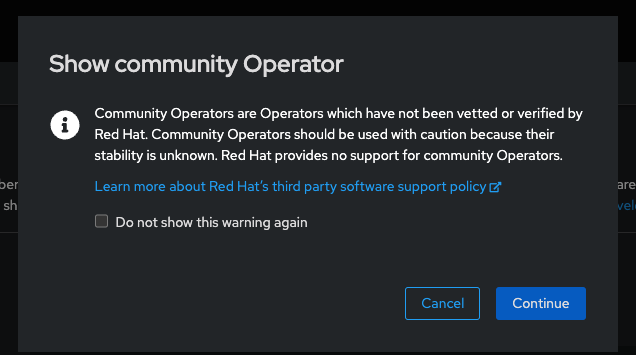

Accept the warning about community operators

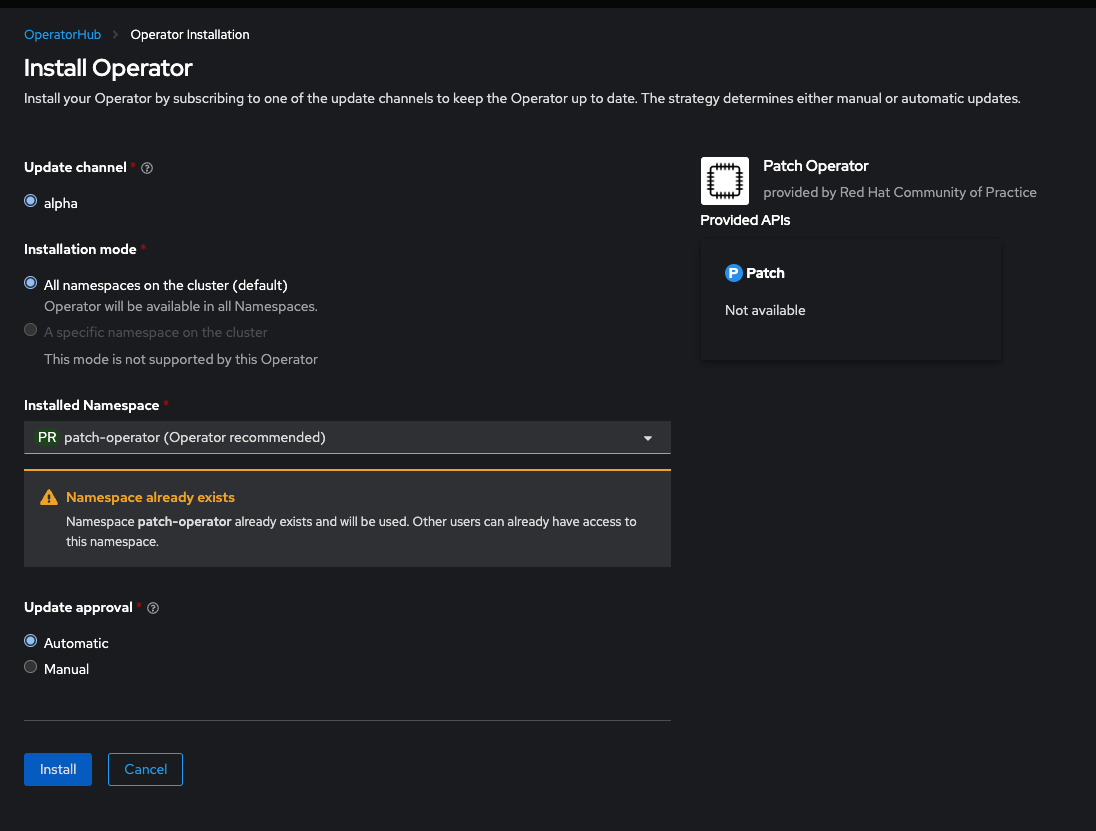

Install the Patch Operator

Create the patch resource

Create the service account, cluster role, and cluster role binding required for the patch operator to access the resources to be patched.
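The resources described above might look like the following sketch. The name `token-refresher-patcher`, the `patch-operator` namespace, the file name, and the exact verb list are assumptions made for illustration. Adjust them to match where the operator was installed and how narrowly you want to scope access.

```shell
# Write the RBAC manifests to a local file (hypothetical file name).
cat > token-refresher-patcher-rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: token-refresher-patcher   # hypothetical name
  namespace: patch-operator       # assumed operator namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: token-refresher-patcher
rules:
  # Read the cluster-wide proxy configuration.
  - apiGroups: ["config.openshift.io"]
    resources: ["proxies"]
    verbs: ["get", "list", "watch"]
  # Read and patch Deployments (token-refresher is the target).
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "watch", "patch", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: token-refresher-patcher
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: token-refresher-patcher
subjects:
  - kind: ServiceAccount
    name: token-refresher-patcher
    namespace: patch-operator
EOF
```

Once the file looks right, it can be applied with `oc apply -f token-refresher-patcher-rbac.yaml`.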

Create the patch Custom Resource for the operator

Note: This tells the Patch operator to fetch the proxy configuration from the cluster and patch it into the token-refresher Deployment.
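A Patch custom resource along these lines could express that behaviour. It is a sketch, not a tested manifest. It assumes the community patch-operator's `redhatcop.redhat.io/v1alpha1` `Patch` API, a service account named `token-refresher-patcher` in a `patch-operator` namespace that holds the permissions described earlier, and a container named `token-refresher` in the Deployment. The resource name, patch key, and file name are hypothetical. In the template, index 0 is assumed to be the target object and index 1 the first source object (the cluster Proxy).

```shell
# Write the Patch manifest to a local file (hypothetical file name).
# The quoted 'EOF' keeps the shell from touching the {{ ... }} templates.
cat > patch-token-refresher.yaml <<'EOF'
apiVersion: redhatcop.redhat.io/v1alpha1
kind: Patch
metadata:
  name: patch-token-refresher       # hypothetical name
  namespace: patch-operator         # assumed operator namespace
spec:
  serviceAccountRef:
    name: token-refresher-patcher   # assumed service account
  patches:
    tokenRefresher:
      targetObjectRef:
        apiVersion: apps/v1
        kind: Deployment
        name: token-refresher
        namespace: openshift-monitoring
      sourceObjectRefs:
        # Cluster-wide proxy configuration object.
        - apiVersion: config.openshift.io/v1
          kind: Proxy
          name: cluster
      patchType: application/strategic-merge-patch+json
      patchTemplate: |
        spec:
          template:
            spec:
              containers:
                - name: token-refresher   # assumed container name
                  env:
                    - name: HTTP_PROXY
                      value: "{{ (index . 1).status.httpProxy }}"
                    - name: HTTPS_PROXY
                      value: "{{ (index . 1).status.httpsProxy }}"
                    - name: NO_PROXY
                      value: "{{ (index . 1).status.noProxy }}"
EOF
```

After reviewing the file, apply it with `oc apply -f patch-token-refresher.yaml`. The sketch reads from the Proxy object's status fields on the assumption that they hold the effective values, including entries the cluster adds to noProxy. Reading from spec instead would use only the values as originally configured.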